The Computer Revolution: From Room-Sized to Pocket-Sized

Apple Launched Macintosh During Super Bowl XVIII in 1984 With a Groundbreaking Commercial

Computers are an integral part of life. People use them to communicate with the people who matter to them at work and at home. Many look up information they want to learn on a computer. Others watch movies, play games or otherwise entertain themselves using computers. From early morning to late night, people rely on computer systems to help them stay organized.

While it is hard for most people to imagine life without computers, it hasn't always been that way. Here's a look at how people developed computers and their operating programs. Along the way, meet some people who influenced their development. You'll discover how computers went from filling entire rooms to being small enough to fit in your pocket.

Steve Hayden, Brent Thomas, and Lee Clow conceived the idea of introducing the world to Macintosh through a television ad based on George Orwell's book "Nineteen Eighty-Four." The ad, directed by Ridley Scott (Alien, Blade Runner) and featuring Anya Major as an unnamed heroine and David Graham as Big Brother, was first shown on 10 local channels during the last break before midnight on December 31, 1983. Still, most people saw the ad during Super Bowl XVIII on CBS.

In a keynote address, Steve Jobs introduced the ad by saying, "It is now 1984. It appears IBM wants it all. Apple is perceived as the only hope to offer IBM a run for its money. Dealers initially welcoming IBM with open arms now fear an IBM dominated and controlled future. They are increasingly turning back to Apple as the only force that can ensure their future freedom."

World's First Electronic General-Purpose Computer Constructed at the University of Pennsylvania

The United States military commissioned John Mauchly and John Presper Eckert to build a new type of computer in 1943. The pair designed the Electronic Numerical Integrator and Computer (ENIAC). The computer, constructed at the Moore School at the University of Pennsylvania, was housed in 40 nine-foot-tall cabinets. It covered over 1,800 square feet and weighed more than 30 tons, thanks to its 70,000 resistors, 10,000 capacitors, 1,500 relays, 6,000 manual switches and 5 million soldered joints. The university installed two 20-horsepower blowers that constantly blew cold air to keep the machine from overheating. It could do 5,000 additions, 357 multiplications, or 38 divisions in one second, but reprogramming the computer to work on a different problem took weeks. In addition, it often needed to be repaired.

University workers eventually dismantled the massive machine, but visitors can see parts of it on the University of Pennsylvania campus and another part at the Smithsonian.

Mathematician Charles Babbage Invents the Steam-powered Difference Engine

Charles Babbage never finished building his steam-powered difference engine, but the design he proposed in 1822 laid the groundwork for the modern computer. Babbage envisioned a machine that used gears, levers and other mechanical components to do complex mathematical calculations. He wanted it to produce the logarithm and navigation tables that astronomers and ships' navigators relied on. He received money from the British government for the 25,000 parts he thought the machine would take, but the funding fell short. Instead of being deterred, Babbage created a different plan that required only 8,000 parts. Even though Babbage never built the machine, Georg Scheutz built one based on Babbage's design in 1854, but it broke down so frequently that it proved impractical.

After abandoning his idea for a steam-powered difference engine, Babbage designed the Analytical Engine, which had a mill where it did the calculations, a store where answers could be kept, a reader where users could enter information using punch cards, and a printer.

Ada Lovelace Ignites the Computer Revolution With World's First Program, Envisioning Beyond Numbers

Ada Lovelace met Charles Babbage in 1833 and later worked with him on his Analytical Engine. She had been asked to translate a paper by mathematician Luigi Menabrea from French into English. While translating the paper, she added thousands of words of her own, which she titled "Notes." Note G was a program for using Babbage's Analytical Engine to calculate Bernoulli numbers. This note is considered the first computer program.

In her "Notes," she also speculated that people could use Babbage's Analytical Engine to work with more than just numbers. For instance, she suggested that it could compose music. Workers only ever constructed a tiny piece of the machine, but experts believe her program would have run successfully.
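To make the goal of Note G concrete, here is a minimal modern sketch that computes Bernoulli numbers. It is not a transcription of Lovelace's sequence of operations: it uses a standard textbook recurrence, the function name `bernoulli_numbers` is made up for this illustration, and it follows the convention in which the second number is -1/2.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return B_0 .. B_n as exact fractions (convention: B_1 = -1/2)."""
    b = [Fraction(0)] * (n + 1)
    b[0] = Fraction(1)
    for m in range(1, n + 1):
        # Recurrence: sum over k = 0..m of C(m + 1, k) * B_k equals 0,
        # so B_m can be solved from the earlier values.
        acc = sum(comb(m + 1, k) * b[k] for k in range(m))
        b[m] = -acc / (m + 1)
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Exact fractions are used because Bernoulli numbers are rational, and floating-point rounding would hide that.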

Herman Hollerith's Punch Card Innovation Transforms 1890 U.S. Census Calculation and Shapes Modern Computing

The quickly expanding population in the United States made the 1880 U.S. census a problem. The census had also grown far more detailed: participants completing the census in 1850 chose between 60 different statistical entries regarding race and sex, but the 1880 census gave people 1,600 choices. Although the census had become more complex, government officials still needed the information quickly.

Working for the census department, Herman Hollerith devised an idea based on Joseph Marie Jacquard's textile loom, suggesting he could use a machine to find patterns in the census data. He created a system where people punched holes into paper strips to represent the data. The strips were fed into a special device by hand. Then, the machine pressed metal pins against the paper strip. Wherever there was a hole, a pin passed through and dipped into a small cup of mercury, completing an electric circuit and making it easy to count the results. Later, the design was changed so that punch cards replaced the paper strips for better durability.
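The counting idea can be sketched in a few lines. In this illustration each record is modeled as the set of positions where a hole was punched, and every hole advances a matching counter, much as a closed circuit would. The position names and the sample records are invented for the example and do not reflect Hollerith's actual layout.

```python
from collections import Counter

# Hypothetical hole positions; the real layout is not given in the text.
cards = [
    {"male", "age_20_29", "farmer"},
    {"female", "age_30_39", "teacher"},
    {"male", "age_30_39", "farmer"},
]


def tabulate(records):
    """Count how many records have a hole at each position."""
    dials = Counter()
    for record in records:
        for position in record:      # a hole lets a pin close a circuit
            dials[position] += 1     # and the matching dial advances by one
    return dials


totals = tabulate(cards)
print(totals["farmer"], totals["age_30_39"])
```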

Vannevar Bush Envisions Memex After Building the World's First Large-Scale Automatic General-Purpose Mechanical Analogue Computer

Vannevar Bush started designing a Memex-like device in the 1930s. He had previously built analyzer machines that could examine data in detail, but his new idea was revolutionary because it connected data in ways similar to how the human mind works. The machine would access information stored on microfilm by association rather than by numerical indexing, which was how large information systems already worked. His idea was to create a desk with a keyboard on the right side. Users who wanted to access specific information would use an electrically powered optical recognition system.

In his article "As We May Think," Bush pictured the machine as a piece of furniture whose mechanism could learn about the user's behavior and work on problems when the user was unavailable. While he never constructed Memex, inventors used many of its ideas in future projects.

Alan Turing Envisions a Universal Computer

Starting around 1936, Alan Turing proved that there is no universal procedure that can decide whether any given mathematical statement can be proved. During his research, he devised the Turing machine, a mathematical model in which a machine follows a series of steps, with a finite number of options available at each step. The hypothetical Turing machine contains an endlessly expandable tape, a tape head capable of carrying out instructions, and a control mechanism with rules the machine must follow. The tape is divided into squares that the tape head can read and change. By following the steps and changing information on the tape as needed, the machine could arrive at an answer to a mathematical query. While Turing's universal machine was never constructed, it is seen as the first theoretical computer to contain an input/output device, memory and a central processing unit.
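The tape, head and rule table described above can be simulated with a short program. The sketch below is only an illustration: the function name, the rule format and the sample machine (which flips every bit on the tape and then halts) are assumptions made for this example, not something taken from Turing's paper.

```python
from collections import defaultdict


def run_turing_machine(rules, tape, state="start", blank="_", max_steps=1000):
    """Run a one-tape Turing machine and return the final tape contents."""
    # The tape is "endless": any square never written holds the blank symbol.
    cells = defaultdict(lambda: blank, enumerate(tape))
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells[head]
        # Each rule maps (state, symbol read) to (symbol to write, move, next state).
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    used = sorted(cells)
    return "".join(cells[i] for i in range(used[0], used[-1] + 1)).strip(blank)


# Hypothetical rule table: invert each bit, halt at the first blank square.
flip_bits = {
    ("start", "0"): ("1", "R", "start"),
    ("start", "1"): ("0", "R", "start"),
    ("start", "_"): ("_", "R", "halt"),
}

print(run_turing_machine(flip_bits, "1011"))
```

The `max_steps` limit is a practical guard; a true Turing machine has no such bound, which is exactly why some questions about its behavior cannot be decided in general.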

In 1939, Hewlett-Packard Was Founded in a Garage in Palo Alto, California

After graduating from Stanford University with degrees in electrical engineering, Bill Hewlett and David Packard founded Hewlett-Packard in 1939 in a one-car garage in Palo Alto, California. The company's first product line included an electronic test instrument to generate audio frequencies. Hewlett-Packard called the instrument the HP 200A. The new company priced this product lower than its competitors, and one of its first customers was Walt Disney. After World War II erupted, the company started making products to disrupt the effectiveness of the enemy's radar systems and fuses for artillery shells.

After the war, the company turned to making oscilloscopes, signal generators, and frequency counters, which it continued to make into the 1960s. The company also worked on a line of semiconductors in conjunction with the Japanese government. Hewlett-Packard introduced its HP 2116A in 1966. Engineers designed the computer to let customers control and automate the company's test instruments.

Konrad Zuse Unveils the Z3, the World's First Programmable Computer

Konrad Zuse built his first computer in 1936 and 1937 in his parents' living room. The 6-foot-tall machine was revolutionary because it operated on a series of on-and-off switches, known as a binary system, but it would only work for a few minutes before the switches got stuck.

In 1941, Zuse introduced his Z3 computer. The computer read its programs from punched tape and was, in principle, as capable as a Turing machine. It performed statistical analyses of wing flutter for the German Aircraft Research Institute. The computer, which did not survive World War II, consisted of a cluster of glass-fronted wooden cabinets and wiring looms. A series of electromechanical relays allowed the computer to switch between two stable states. Since the computer relied on tape to operate, it was easy for operators to switch between different programs.

J.V. Atanasoff and Clifford Berry Create the ABC Computer

After finishing his doctorate, J.V. Atanasoff moved to Ames, Iowa, to become an associate professor at Iowa State University. He had encountered many challenging math problems while working on his degree and thought there had to be a faster, more efficient way to solve them. He concluded that the calculating machines of the time depended too heavily on the operator's skill.

Iowa State University gave the professor $650 to build a prototype computer, and he hired Clifford Berry, a recent electrical engineering graduate, to assist him. The computer they developed, called the ABC or Atanasoff-Berry Computer, looked like a desk and weighed about 700 pounds. It used 300 vacuum tubes and could complete one step in solving a set of linear equations every 15 seconds. This computer was the first to have an arithmetic logic unit, which is standard in modern computers. The university dismantled it during World War II despite its place in computer history.
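The kind of problem the ABC targeted, solving several linear equations at once, can be illustrated with elimination. The sketch below uses ordinary Gauss-Jordan elimination with exact fractions; it is a modern stand-in rather than a model of how the ABC's hardware worked, and the function name is made up for this example. It assumes the system has exactly one solution.

```python
from fractions import Fraction


def solve_linear_system(a, b):
    """Solve a * x = b by Gauss-Jordan elimination with exact fractions."""
    n = len(a)
    # Build the augmented matrix [a | b].
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Find a row with a non-zero entry in this column and swap it up.
        pivot = next(r for r in range(col, n) if m[r][col] != 0)
        m[col], m[pivot] = m[pivot], m[col]
        # Scale the pivot row so the pivot becomes 1.
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col:
                factor = m[r][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [row[-1] for row in m]


# 2x + y = 5 and x - y = 1
print(solve_linear_system([[2, 1], [1, -1]], [5, 1]))
```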

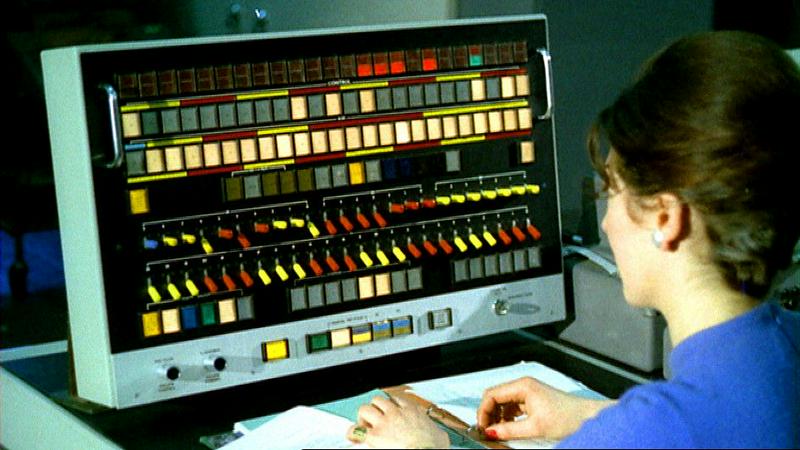

Meet UNIVAC I – The World's First Commercial Computer

The U.S. Census Bureau hired J. Presper Eckert and John Mauchly, who had previously built the Electronic Numerical Integrator and Computer (ENIAC), to lead a team to develop a commercial computer. The men sold their company to Remington Rand, which made the UNIVAC I. The machine weighed 16,000 pounds and was 14 feet long, 7.5 feet wide and 8 feet high.

It used 5,000 vacuum tubes and could perform 1,000 calculations per second. In announcing the computer, Allen N. Seares, vice president and general manager of Remington Rand, said the computer could "compute a complicated payroll for 10,000 employees in only 40 minutes." While declaring the computer "an 8-foot mathematical genius," the New York Times said that the computer could "classify an average citizen as to sex, marital status, education, residence, age group, birthplace, employment, income and a dozen other classifications" in one-sixth of a second. In addition, the computer correctly predicted that Dwight D. Eisenhower would win the 1952 presidential election.

Charting the Course of Computing History With the Electronic Delay Storage Automatic Calculator

Maurice Wilkes and his University of Cambridge Mathematical Laboratory team constructed the Electronic Delay Storage Automatic Calculator (EDSAC). The team started working on the project in 1947 and ran its first program on May 6, 1949. The computer, which used mercury delay lines for memory and derated vacuum tubes for logic, stayed in use until 1958. The computer's first purpose was to assist radar operators.

Users fed programs into the computer on five-hole punched tape. Initially, programs were limited to about 800 words, a limit later expanded to 1,024 words. This computer was among the first to store its programs in memory. It was also the first to have a game installed, a version of Tic Tac Toe called OXO.

The U.S. Catches Up by Developing the Standards Eastern Automatic Computer

The Standards Eastern Automatic Computer, also called the Standards Electronic Automatic Computer and the National Bureau of Standards Interim Computer, was constructed by the U.S. National Bureau of Standards in 1950. Initially, the computer used 747 vacuum tubes, which researchers expanded to 1,500 tubes for amplification, inversion, and information storage on dynamic flip-flops. This 3,000-pound computer was the first to do most of its logic with solid-state devices. It had 10,500 germanium diodes, which researchers eventually expanded to 16,000 diodes. Harry Huskey led the team that constructed this computer, which could run programs that took over 100 hours to complete. It was one of the most reliable computers at the time of its construction.

The Standards Eastern Automatic Computer was the fastest computer available at the time. It could solve addition problems in about 864 microseconds and multiplication problems in about 2,980 microseconds.

Meet the Architect Behind COBOL, Grace Hopper

After graduating from Yale University with a doctorate in mathematics, Grace Hopper gave up a teaching career at Vassar College to join the U.S. Navy. She worked on developing the Mark I computer at Harvard University and wrote a 500-page operator's manual for the Automatic Sequence-Controlled Calculator. After World War II, she became a research fellow at Harvard University before joining Eckert-Mauchly Corporation and assisting with developing the Universal Automatic Computer (UNIVAC).

By the mid-1950s, businesses faced a problem applying computers to their operations. They were spending about $800,000 on programming and $500,000 on the hardware to run the programs. A group convinced the United States Department of Defense to tackle the problem. Grace Hopper and others solved it by writing Common Business-Oriented Language (COBOL), which let programmers give computers instructions using English-like words as well as numbers. COBOL was based on FLOW-MATIC, a language Hopper had developed earlier. Although it has been revised several times, COBOL became a standard language for business computing.

John Backus and Team Forge the First High-Level Programming Language, FORTRAN

According to John Backus, before his team developed FORTRAN, a computer programmer "had to employ every trick that he could think of to make the program run fast enough to justify the rather enormous cost of running it. And he had to do all of that by his own ingenuity because the only information he really had was the problem at hand, and the machine manual." He also pointed out that, sometimes, there was not even a manual. In addition, many computer programmers considered the information they had amassed highly guarded secrets that they would not share willingly.

In late 1953, John Backus was working for IBM when he proposed to his superiors that the company develop a more practical alternative to assembly language, one that let programmers tell computers what they wanted done. FORTRAN, released in 1957, eliminated much of the need to hand-code computer programs, helping to reduce startup costs. The language became widely accepted and was considered the standard for many decades.

Jack Kilby and Robert Noyce Change the Computer Landscape With Integrated Circuits

Before Jack Kilby and Robert Noyce developed their integrated circuits, workers had to wire together the components for each computer manually, and the process could be very tedious and uneconomical. In addition, one faulty part could render the whole system unusable. Jack Kilby joined Texas Instruments in 1958 and began working on its Micro-Module program, sponsored by the U.S. Army Signal Corps. These modules came in standardized sizes, and workers could snap them together, but they did not reduce the number of components. Therefore, he hatched a successful idea to solve the problem by creating an integrated circuit.

Working independently, Robert Noyce came up with a similar integrated circuit at about the same time. Noyce's invention was more of a commercial success. Yet, the two men decided to share credit for the invention because they believed there was enough credit to go around.

The Atlas Computer Becomes the World's Most Advanced Supercomputer

Tom Kilburn and his team at the University of Manchester, with support from Ferranti Ltd, built the Atlas computer, the fastest computer at the time. However, some argue that the fastest one was IBM's Stretch. The Atlas computer, which Kilburn designed to run nuclear physics calculations, used 60,000 transistors, 300,000 diodes and 40 circuit boards.

This computer, completed in 1962, was the first to have virtual memory, allowing people to use it to work on multiple projects simultaneously. The computer had a two-level storage area and automatically moved data from one level to the other, a job the operator had previously done by hand, which saved a great deal of time. When it moved more critical information into the main storage area, it also determined the least used page there and moved that page to the secondary storage area.
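The two-level scheme can be sketched as a small class that keeps a fixed number of pages in main storage and pushes one out to secondary storage when room is needed. This sketch swaps in a simple least-recently-used rule as a stand-in; it does not reproduce the rule Atlas actually used, and the class name, method names and placeholder page contents are all invented for the illustration.

```python
from collections import OrderedDict


class TwoLevelStore:
    """Toy model of main storage backed by a larger secondary store."""

    def __init__(self, main_capacity):
        self.main_capacity = main_capacity
        self.main = OrderedDict()   # page -> data, least recently used first
        self.secondary = {}

    def access(self, page):
        if page in self.main:
            # Page is already in main storage: just mark it as recently used.
            self.main.move_to_end(page)
            return self.main[page]
        # Fetch from secondary storage (or create placeholder data).
        data = self.secondary.pop(page, f"data-{page}")
        if len(self.main) >= self.main_capacity:
            # Main storage is full: move the least recently used page out.
            old_page, old_data = self.main.popitem(last=False)
            self.secondary[old_page] = old_data
        self.main[page] = data
        return data


store = TwoLevelStore(main_capacity=2)
for page in [1, 2, 1, 3]:
    store.access(page)
print(list(store.main), list(store.secondary))
```

The program using the store never has to say where a page lives, which is the time-saving the operator previously provided by hand.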

Unveiling Douglas Engelbart's Mouse

When Douglas Engelbart opened the Augmentation Research Center at Stanford Research Institute in 1957, he assembled a team to improve how people work with computers using what he called the bootstrap technique. While the team received many patents, one of the first was for a computer mouse. The mouse consisted of a wooden box with rotating wheels inside, and moving it moved a cursor, which the team called a bug, on the user's screen. The team named it a mouse because the wire connecting the box to the computer looked like a mouse's tail.

Engelbart used the mouse in a public presentation at the Fall Joint Computer Conference, held by the Association for Computing Machinery and the Institute of Electrical and Electronics Engineers Computer Society in San Francisco on December 9, 1968. During the presentation, he used it to resize windows, highlight text and move the cursor around the screen. The presentation was also one of the first remote conference calls in the world, as people in three different locations participated in the demonstration.

Dennis Ritchie and Ken Thompson Revolutionize the Computer Industry With UNIX

The C programming language was created at Bell Labs by Dennis Ritchie and Ken Thompson to simplify UNIX development. While most computers in 1969, when the men started working, relied on batch processing, UNIX stood out as a multitasking, multi-user operating system. C made it easy to adapt the system to multiple platforms, often with few changes.

The development of UNIX led to the UNIX philosophy. While people have summarized it in many ways, there are four basic tenets at its heart. The first is that each module should do a specific task, and the second is to keep things simple. The third is to provide clarity, which makes it easy for developers to work together. The last tenet is to design tools that can be combined in various ways to achieve the desired outcome.
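The first and last tenets can be illustrated with a small sketch: three single-purpose stages, loosely named after familiar UNIX tools, chained by a generic `pipeline` helper. These Python functions are written for this example only and are not the real utilities.

```python
from functools import reduce


def grep(pattern):
    """Keep only the lines that contain the pattern."""
    return lambda lines: (line for line in lines if pattern in line)


def sort_lines():
    """Sort the lines alphabetically."""
    return lambda lines: iter(sorted(lines))


def uniq():
    """Drop lines that repeat the line immediately before them."""
    def run(lines):
        previous = object()  # sentinel that never equals a real line
        for line in lines:
            if line != previous:
                yield line
            previous = line
    return run


def pipeline(*stages):
    """Feed the output of each stage into the next one."""
    return lambda lines: reduce(lambda data, stage: stage(data), stages, lines)


log = ["error: disk", "ok", "error: net", "error: disk"]
result = list(pipeline(grep("error"), sort_lines(), uniq())(log))
print(result)
```

Because every stage takes lines in and gives lines out, the same pieces can be rearranged or reused for a different job without being rewritten.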

The World's First Commercial Dynamic Random Access Memory Chip, the Intel 1103, Transforms the Industry

In 1969, William Regitz of Honeywell started contacting companies, looking for a partner to help develop a dynamic memory circuit built around a three-transistor cell that Honeywell workers had devised. After several companies turned him down, Intel became excited about the project and assigned Joel Karp to work with Regitz.

After developing the 1X, 2Y cell and creating the Intel 1102, Intel officials decided that a 2X, 2Y cell would work better, leading to the release of the 1103 in October 1970. This allowed manufacturers to move away from bulky magnetic-core random access memory and to refine previous transistor-based memory cell designs. R.H. Dennard, who designed the one-transistor memory cell, said the development of this cell "allowed RAM to become very dense and inexpensive. As a result, mainframe computers could be equipped with relatively fast RAM to act as a buffer for the increasing amount of data stored on disk drives. This vastly sped up the process of accessing and using stored information."

Alan Shugart and His Team at IBM Invent the Floppy Disk

In 1971, Alan Shugart and a team at IBM invented the memory disk, which later became the floppy disk. A disk drive in a computer gripped the floppy disk and spun it like a record as the machine read the information on its magnetic iron oxide coating, and users could store data on one or both sides. The original floppy disk was an 8-inch square that could hold 100 KB of data. Researchers used it to move data between a computer and an IBM 3330, which they used as a storage device.

Developers soon decided that the floppy disks were too big. One night, Jim Adkisson and Don Massaro were discussing the idea with An Wang of Wang Laboratories over drinks. Wang pointed at a cocktail napkin and said the disk should be about that size. Soon, the team created a 5.25-inch floppy disk, a format that could eventually hold up to 1.2 MB of data.

Xerox Engineer Robert Metcalfe Creates Ethernet

In 1973 and 1974, researchers working at Xerox Palo Alto Research Center developed Ethernet to let Alto computers communicate with each other. Robert Metcalfe, who had studied the University of Hawaii's ALOHAnet while working on his doctorate, led the team. ALOHAnet allowed computers to communicate with each other using radio frequencies. Xerox wanted the system because it was developing a laser printer and wanted all computers at its Palo Alto, California, facility to communicate with it.

The initial system had problems when more than one computer tried to send data simultaneously. The bits of data would collide and never reach their destination. To solve this problem, researchers had each computer wait a random amount of time before sending again, so that one transmission would usually get through before the other began. Ethernet became standardized after the Local and Metropolitan Area Networks Committee published its standards in 1980.
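The random-wait idea can be sketched as a small simulation. After a collision, every station draws a random delay, and the station with the uniquely shortest delay gets to send first; if two stations tie, they collide again and redraw. This is a deliberately simplified model, not the backoff rule in the Ethernet standard, and the function name and the `max_slots` parameter are assumptions made for the example.

```python
import random


def resolve_collision(stations, max_slots=4, rng=None):
    """Return (rounds, winner) once exactly one station draws the earliest slot."""
    rng = rng or random.Random()
    rounds = 0
    while True:
        rounds += 1
        # Every station that collided picks a random number of slots to wait.
        waits = {station: rng.randrange(max_slots) for station in stations}
        earliest = min(waits.values())
        first = [s for s, wait in waits.items() if wait == earliest]
        if len(first) == 1:
            # Only one station transmits first, so its data gets through.
            return rounds, first[0]
        # Otherwise two or more stations tied and collide again: redraw.


rounds, winner = resolve_collision(["A", "B", "C"], rng=random.Random(0))
print(f"station {winner} sent successfully after {rounds} round(s)")
```

A larger `max_slots` makes ties less likely but increases the average wait, which is the basic trade-off behind any random backoff scheme.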

The Race to Introduce Personal Computers

By the early 1970s, many people were familiar with mainframe and time-share computers, but they wanted a computer that they could use personally. After the invention of microprocessors, that became possible. Many companies introduced personal computers to the market, which hobbyists and computer technicians could utilize for their interests.

The first personal computers, like the Altair 8800, came in kits that the end user had to assemble. One of the first was the SCELBI, which contained five basic circuit boards and allowed memory expansion to 16 KB. Jonathan Titus, who designed the Mark 8 computer, took to "Radio-Electronics" magazine to tell people how to build a personal computer while offering them $50 circuit board sets to complete their project. Soon, people could buy preassembled personal computers, like the IBM 5100, which weighed about 55 pounds, and Radio Shack's TRS-80.

In 1975, Bill Gates and Paul Allen Founded a Little Company Called Microsoft in Albuquerque, New Mexico

Bill Gates and Paul Allen founded Microsoft, initially called Micro-Soft, in 1975 in Albuquerque, New Mexico. To do this, Bill Gates left Harvard University, while Paul Allen quit his job as a computer technician. They started their business in Albuquerque because it was home to MITS, maker of the Altair 8800. Microsoft found early success with its MS-DOS operating system, which it licensed to various companies. The system was the most prevalent throughout the 1980s. Unlike on computers with a graphical user interface, users on computers with the MS-DOS operating system manipulated files on their devices by typing instructions at a command line.

In 1986, Gates moved Microsoft to Redmond, Washington. The company went public that year, and the first shares sold for $21, which raised $61 million.

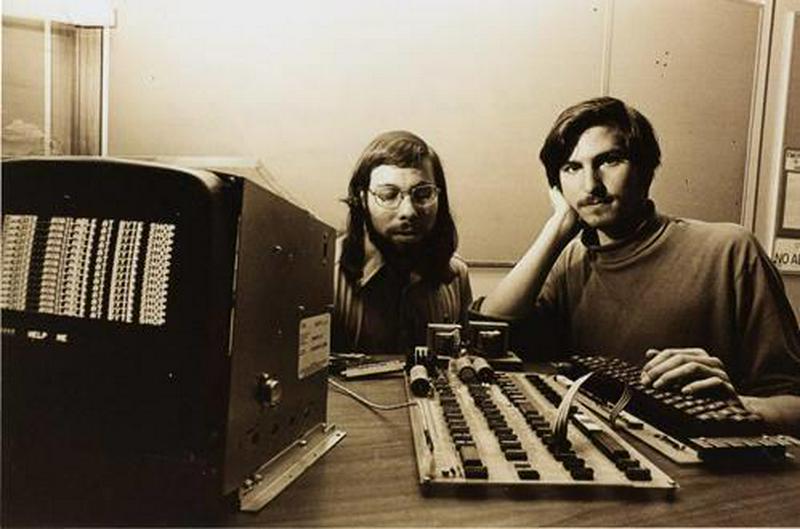

In 1976, Steve Jobs and Steve Wozniak Introduce Apple I to the World

Steve Wozniak designed the Apple I computer and, along with his friend Steve Jobs, founded Apple to sell it. The computer was unique because it contained video display terminal circuitry and a keyboard interface on a single board, which allowed users to see visual displays on a composite video monitor instead of an expensive computer terminal. Wozniak debuted the computer at the Homebrew Computer Club in Palo Alto, California, in July 1976. Still, the pair had to wait until they had 50 orders before they could buy the parts on credit and start producing the computers.

Wozniak started building the prototype before he could afford its central processing unit, which cost about $175. He completed the project after MOS Technology released a $25 processor.

Tim Berners-Lee Creates HyperText Markup Language and Introduces People to the World Wide Web

Tim Berners-Lee created HyperText Markup Language (HTML) between 1988 and 1991 while working as a contractor at the European Organization for Nuclear Research in Geneva, Switzerland. He wanted to give his colleagues an easier way to cross-reference their research. This system, still used on the web, worked well because of its simplicity, but it failed to impress the supervisors at the nuclear research center.

While developing HTML, Berners-Lee also created the idea of pages having Universal Resource Identifier addresses, effectively creating the World Wide Web. He also made the first web server to hold pages while allowing others to access them.
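The parts of a web address can be pulled apart with Python's standard `urllib.parse` module. The address used here is the location of the first web page at the European Organization for Nuclear Research, chosen only as an example; it does not appear in the text above.

```python
from urllib.parse import urlsplit

# Example address: the first web page (an illustrative choice).
address = "http://info.cern.ch/hypertext/WWW/TheProject.html"

parts = urlsplit(address)
print("scheme:", parts.scheme)   # how to fetch the page
print("host:  ", parts.netloc)   # which server holds it
print("path:  ", parts.path)     # which page on that server
```

The scheme names the protocol, the host names the web server, and the path names the page on that server, which together let any page point to any other.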

Microsoft Releases Windows 95 and People Instantly Love It

Microsoft's Windows 95 was the first system to combine the company's MS-DOS and Microsoft Windows products. It was the first operating system to feature a Start button and a taskbar for switching between tasks. A Microsoft internet option was included, making it easier for people to get information from the internet. Its 32-bit architecture meant the system was more stable than previous versions, and people could use the computer to do multiple tasks simultaneously.

The company introduced the system to the public using The Rolling Stones' 1981 single "Start Me Up," so the commercial instantly drew viewers' attention. The company also spent heavily creating hype around the product, including lighting up the Empire State Building in Microsoft's colors, so people did not want to miss out.

Apple Creates an All-in-One Macintosh Desktop Computer Called the iMac G3

In 1998, Apple, financially strapped, put lots of resources into the iMac G3, the first model in its iMac line of Macintosh desktop computers, and the public loved it. This computer was the company's first major release since Steve Jobs returned to the company he co-founded.

This computer is the only Mac with an egg shape and was available in seven colors. Many people, new to having computers in their homes, were sold on how easy this computer was to set up, and they could easily picture it fitting beautifully into their space. Most computers at the time had a separate monitor and tower, but people loved that the iMac G3 had both in one unit because it created a less cluttered look. The company carefully positioned the computer as internet-ready when more consumers than ever were looking to get online.

Steve Jobs Revolutionizes How People Access the Internet With the First iPhone

Steve Jobs revealed the first iPhone in 2007. He sold the public on having one device that allowed them to make phone calls, listen to their favorite tunes and use the internet. Its touchscreen let people operate the phone with their fingers instead of a stylus.

Engineers completed the top-secret effort to develop the iPhone, Project Purple 2, over 30 months in conjunction with Cingular Wireless, which is now part of AT&T. The company worked until the last minute to prepare this phone for public release. For instance, glass replaced the plastic screen only six weeks before its release because the screen on Jobs' phone got scratched by his keys.

University of Maryland Team Builds First Reprogrammable Quantum Computer

Quantum computers can solve certain problems faster than standard computers, but until 2016, researchers had trouble controlling them because of the unique physics required. A team working at the University of Maryland built a fully reprogrammable quantum computer whose processor contains five qubits. Each qubit stores information in an electrically charged atom, or ion, held in place by an electromagnetic field.

Researchers can reprogram the quantum computer using lasers. Staff at the university and its partner IonQ say that they hope to make the system bigger than five qubits and easier to reprogram, but their project, announced in 2016, is a vital first step for people working in many fields, including biology, medicine, climate science and materials development.
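One way to see why even five qubits matter is to count the classical bit patterns they can hold in combination. A register of n qubits is described using 2 to the power n basis states, so five qubits span 32 of them. The sketch below only enumerates those patterns; it does not simulate quantum behavior, and the function name is invented for the example.

```python
from itertools import product


def basis_states(n_qubits):
    """List every classical bit pattern an n-qubit register can be measured in."""
    return ["".join(bits) for bits in product("01", repeat=n_qubits)]


states = basis_states(5)
print(len(states), states[0], states[-1])
```

Each added qubit doubles the count, which is why growing the system beyond five qubits is such an important goal.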
