OpenAI chief Altman described what 'scary' AI means to him, but ChatGPT has its own examples

OpenAI CEO testified before Senate subcommittee to hash out potential avenues to regulate AI

OpenAI CEO Sam Altman testified before Congress in Washington, D.C., this week about regulating artificial intelligence as well as his personal fears over the tech and what "scary" AI systems mean to him.

Fox News Digital asked OpenAI’s wildly popular chatbot, ChatGPT, to also weigh in on examples of "scary" artificial intelligence systems, and it reported six hypothetical instances of how AI could become weaponized or have potentially harmful impacts on society.

When asked by Fox News Digital on Tuesday after his testimony before a Senate Judiciary subcommittee, Altman gave examples of "scary AI" that included systems that could design "novel biological pathogens."

"An AI that could hack into computer systems," he continued. "I think these are all scary. These systems can become quite powerful, which is why I was happy to be here today and why I think this is so important."

OpenAI CEO Sam Altman speaks with the press after his testimony before a Senate Judiciary subcommittee on May 16, 2023. (Fox News)

Fears have spread among some tech experts and leaders, as well as members of the public, that artificial intelligence could grow so knowledgeable and powerful that it could wipe out society.

The "godfather of artificial intelligence," computer scientist Geoffrey Hinton, for example, warned last month that "it's not inconceivable" AI could wipe "out humanity." His remarks came after he quit his job at Google, saying he regrets his life’s work due to how AI can be misused.

Advanced autonomous weapon system

When asked for examples of "scary AI," ChatGPT responded, "These [autonomous weapon systems], often referred to as ‘killer robots’ or ‘lethal autonomous weapons,’ raise ethical concerns and the potential for misuse or unintended consequences." (Costfoto / Future Publishing via Getty Images)

Following Altman’s comments on scary AI and other tech leaders sounding the alarm on misused AI, Fox News Digital asked ChatGPT on Wednesday to provide an example of "scary AI."

"An example of ‘scary AI’ is an advanced autonomous weapon system that can independently identify and attack targets without human intervention," the chatbot responded. "These systems, often referred to as ‘killer robots’ or ‘lethal autonomous weapons,’ raise ethical concerns and the potential for misuse or unintended consequences."

ChatGPT said to "imagine a scenario where such an AI-powered weapon system is programmed with a set of criteria to identify and eliminate potential threats." If such a system malfunctioned or wound up in the hands of bad actors, "it could lead to indiscriminate targeting, causing widespread destruction and loss of life."

The chatbot added this was just one example of "how AI, if not properly controlled or guided by human values, can have potentially alarming implications."

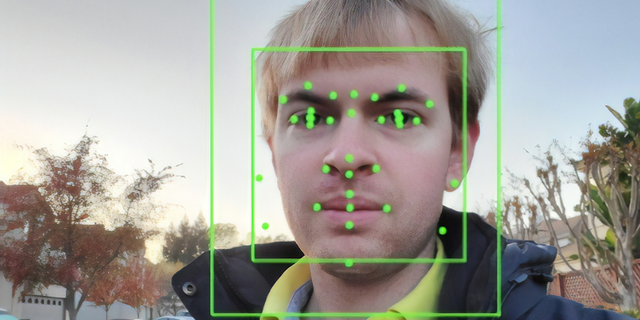

Deepfakes

Fears have spread among some tech experts and leaders, as well as members of the public, that artificial intelligence could grow so knowledgeable and powerful that it could wipe out society. (Reuters / Dado Ruvic / Illustration)

Fox News Digital asked the chatbot to provide additional examples of "scary AI," and the second example provided was "deepfakes," which often involve editing videos or photos of people to make them look like someone else by using deep-learning AI.

"This technology can be misused for spreading disinformation, blackmail or manipulating public opinion. Deepfakes have the potential to undermine trust, create social unrest and damage reputations," ChatGPT said.

Deepfakes hit the public’s radar in 2017 after a Reddit user posted realistic-looking pornography of celebrities to the platform. Deepfakes have since become more widely used and convincing, and they have led to phony videos, such as comedian Jerry Seinfeld starring in the classic 1994 crime drama "Pulp Fiction" or rapper Snoop Dogg appearing in a fake infomercial promoting tarot card readings.

AI-powered surveillance

ChatGPT also listed "AI-powered surveillance" systems used by bad actors, such as "authoritarian regimes," as an example of "scary AI." (Smith Collection / Gado / Getty Images / File)

The chatbot next listed "AI-powered surveillance" systems used by bad actors, such as "authoritarian regimes," as an example of "scary AI."

"The use of AI in surveillance systems can raise concerns about privacy and civil liberties," the chatbot said. "Advanced facial recognition algorithms combined with surveillance cameras can enable mass tracking and identification of individuals, leading to potential abuses by authoritarian regimes or invasive monitoring of citizens."

In the U.S., some police departments and private businesses have employed AI-powered facial recognition systems in an effort to curb crime. The use of the tech has come with some notable failures, including a handful of lawsuits from people who said they were wrongfully arrested when the systems misidentified a suspect in a criminal case.

Social engineering bots

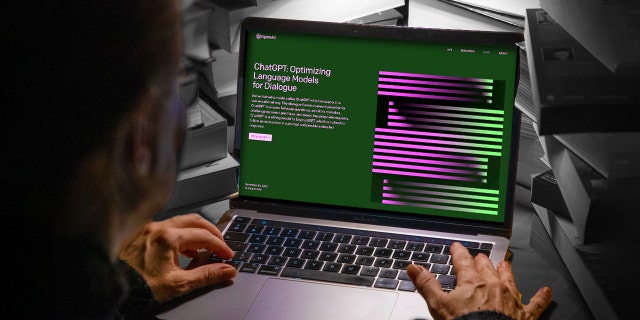

Artificial Intelligence is facing a debate over regulation. (iStock)

ChatGPT said chatbots themselves pose a threat to society and individuals, arguing that "chatbots and social engineering algorithms" can be trained to "manipulate human behavior and exploit vulnerabilities."

"These bots can be used for phishing attacks, spreading propaganda or influencing public opinion through social media platforms, amplifying the potential for misinformation and societal division," the chatbot said.

When asked to elaborate on the threats chatbots could pose, ChatGPT said that such technology has the ability to mimic human conversations and "convincingly interact with individuals." Chatbots could then "exploit psychological vulnerabilities" of humans by analyzing their responses, potentially leading to humans divulging sensitive personal information or buying into false information from the bots, or the systems could manipulate human "emotions for malicious purposes."

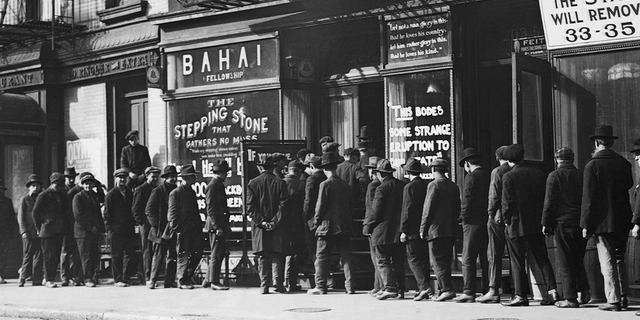

Unemployment and economic disparity

Unemployed men line up for coffee and bread at a soup kitchen in New York City circa 1930. (FPG / Hulton Archive / Getty Images)

ChatGPT argued that as artificial intelligence systems become more powerful, they could take over jobs typically occupied by humans, which could widen "economic inequality."

"There are concerns about job displacement and widening economic inequality," ChatGPT said. "AI-driven systems and robots replacing human workers in various industries could lead to significant job losses and social disruptions if not properly managed or accompanied by measures to retrain and reskill the workforce."

A report from Goldman Sachs earlier this year found that generative AI could replace or affect 300 million jobs around the world. Another study from outplacement and executive coaching firm Challenger, Gray & Christmas found that ChatGPT could replace at least 4.8 million American jobs.

The reports have caused some anxiety among workers, including in fields most likely affected by the technology, such as customer service representatives, but proponents of the technology say the proliferation of AI could lead to the development of new jobs and a strengthened economy.

AI bias and discrimination

ChatGPT said AI systems can perpetuate bias and discrimination based on how they are trained, which could amplify "existing societal inequalities."

"AI systems can inadvertently perpetuate biases present in the data they are trained on, leading to discriminatory outcomes," ChatGPT said. "For example, biased algorithms in hiring processes or predictive policing systems could perpetuate systemic biases and unfair treatment of certain groups, amplifying existing societal inequalities."

ChatGPT can sometimes "hallucinate," meaning it can respond to a query with an answer that sounds correct but is actually made up. Concerns over hallucinations and political bias in AI systems have intensified in recent months, with tech giant Elon Musk telling Fox News last month that Silicon Valley programmers "are training the AI to lie."

ChatGPT can sometimes "hallucinate," meaning it can respond to a query with an answer that sounds correct but is actually made up. (Getty Images / File)

ChatGPT highlighted in its responses about "scary AI" that though its examples shine a light on the "potential negative implications of AI, they also reflect the importance of responsible development, regulation and ethical considerations to mitigate such risks and ensure the beneficial and safe use of AI technologies."

Altman said during the Senate hearing Tuesday that his greatest fear as OpenAI develops artificial intelligence is that it causes major harmful disruptions for people.

OpenAI CEO Sam Altman takes his seat before the start of the Senate Judiciary subcommittee hearing on AI, Tuesday, May 16, 2023. (Bill Clark / CQ-Roll Call Inc. via Getty Images)

"My worst fears are that we cause significant – we, the field, the technology industry – cause significant harm to the world," Altman said. "I think that could happen in a lot of different ways. It’s why we started the company."

"I think if this technology goes wrong, it can go quite wrong, and we want to be vocal about that," he added. "We want to work with the government to prevent that from happening."

Altman said during the hearing that he invites the opportunity to work with U.S. lawmakers on crafting regulations for AI that would help prevent unwanted outcomes with the tech.
