Fears of AI hitting black market stir concerns of criminals evading government regulations: Expert

Dr. Harvey Castro, a speaker on artificial intelligence, worries about AI falling into criminal hands

Artificial intelligence – specifically large language models like ChatGPT – can theoretically give criminals information needed to cover their tracks before and after a crime, then erase that evidence, an expert warns.

Large language models, or LLMs, make up a segment of AI technology that uses algorithms that can recognize, summarize, translate, predict and generate text and other content based on knowledge gained from massive datasets.

ChatGPT is the best-known LLM, and its rapid, successful development has created unease among some experts and prompted a Senate hearing at which Sam Altman, the CEO of ChatGPT maker OpenAI, pushed for oversight.

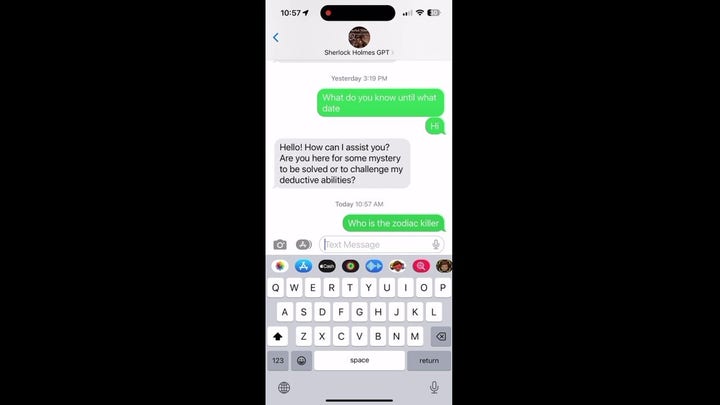

Corporations like Google and Microsoft are developing AI at a fast pace. But when it comes to crime, that's not what scares Dr. Harvey Castro, a board-certified emergency medicine physician and national speaker on artificial intelligence who created his own LLM called "Sherlock."

Samuel Altman, CEO of OpenAI, testifies before the Senate Judiciary Subcommittee on Privacy, Technology, and the Law May 16, 2023 in Washington, D.C. The committee held an oversight hearing to examine AI, focusing on rules for artificial intelligence. (Photo by Win McNamee/Getty Images)

It's "the unscrupulous 18-year-old" who can create their own LLM without the guardrails and protections and sell it to potential criminals, he said.

"One of my biggest worries is not actually the big guys, like Microsoft or Google or OpenAI ChatGPT," Castro said. "I'm actually not very worried about them, because I feel like they're self-regulating, and the government's watching and the world is watching and everybody's going to regulate them.

"I'm actually more worried about those teenagers or someone that's just out there, that's able to create their own large language model on their own that won't adhere to the regulations, and they can even sell it on the black market. I'm really worried about that as a possibility in the future."

On April 25, OpenAI.com said the latest ChatGPT model will have the ability to turn off chat history.

"When chat history is disabled, we will retain new conversations for 30 days and review them only when needed to monitor for abuse, before permanently deleting," OpenAI.com said in its announcement.

The ability to use that type of technology, with chat history disabled, could prove beneficial to criminals and problematic for investigators, Castro warned. To put the concept into real-world scenarios, take two ongoing criminal cases in Idaho and Massachusetts.

Bryan Kohberger was pursuing a Ph.D. in criminology when he allegedly killed four University of Idaho undergrads in November 2022. Friends and acquaintances have described him as a "genius" and "really intelligent" in previous interviews with Fox News Digital.

In Massachusetts there's the case of Brian Walshe, who allegedly killed his wife, Ana Walshe, in January and disposed of her body. The murder case against him is built on circumstantial evidence, including a laundry list of alleged Google searches, such as how to dispose of a body.

Castro's fear is that someone with more expertise than Kohberger could create an AI chat tool and erase a search history that might include vital pieces of evidence in a case like the one against Walshe.

"Typically, people can get caught using Google in their history," Castro said. "But if someone created their own LLM and allowed the user to ask questions while telling it not to keep history of any of this, while they can get information on how to kill a person and how to dispose of body."

Right now, ChatGPT refuses to answer those types of questions. It blocks "certain types of unsafe content" and does not answer "inappropriate requests," according to OpenAI.

Dr. Harvey Castro, a board-certified emergency medicine physician and national speaker on artificial intelligence who created his own LLM called "Sherlock," talks to Fox News Digital about potential criminal uses of AI. (Chris Eberhart)

During last week's Senate testimony, Altman told lawmakers that GPT-4, the latest model, will refuse harmful requests such as violent content, content about self-harm and adult content.

"Not that we think adult content is inherently harmful, but there are things that could be associated with that that we cannot reliably enough differentiate. So we refuse all of it," said Altman, who also discussed other safeguards such as age restrictions.

"I would create a set of safety standards focused on what you said in your third hypothesis as the dangerous capability evaluations," Altman said in response to a senator's questions about what rules should be implemented.

"One example that we’ve used in the past is looking to see if a model can self-replicate and self-exfiltrate into the wild. We can give your office a long other list of the things that we think are important there, but specific tests that a model has to pass before it can be deployed into the world.

"And then third I would require independent audits. So not just from the company or the agency, but experts who can say the model is or isn't in compliance with these stated safety thresholds and these percentages of performance on question X or Y."

To put the concepts and theory into perspective, Castro said, "I would guess like 95% of Americans don't know what LLMs are or ChatGPT," and he would prefer it to be that way.

Artificial intelligence hacking data in the near future. (iStock)

But there is a possibility Castro's theory could become reality in the not-so-distant future.

He alluded to a now-terminated AI research project by Stanford University, which was nicknamed "Alpaca."

A group of computer scientists created a product that cost less than $600 to build that had "very similar performance" to OpenAI’s GPT-3.5 model, according to the university's initial announcement, and was running on Raspberry Pi computers and a Pixel 6 smartphone.

Despite its success, researchers terminated the project, citing licensing and safety concerns. The product wasn't "designed with adequate safety measures," the researchers said in a press release.

"We emphasize that Alpaca is intended only for academic research and any commercial use is prohibited," according to the researchers. "There are three factors in this decision: First, Alpaca is based on LLaMA, which has a non-commercial license, so we necessarily inherit this decision."

The researchers went on to say the instruction data is based on OpenAI’s text-davinci-003, "whose terms of use prohibit developing models that compete with OpenAI. Finally, we have not designed adequate safety measures, so Alpaca is not ready to be deployed for general use."

But Stanford's successful creation unsettles Castro's otherwise glass-half-full view of how OpenAI and LLMs could change humanity. "I tend to be a positive thinker," Castro said, "and I'm thinking all this will be done for good. And I'm hoping that big corporations are going to put their own guardrails in place and self-regulate themselves."
