AI might have prevented Boston Marathon bombing, but with risks: former police commissioner

Ed Davis, Boston police commissioner during 2013 bombing, says AI 'also available to criminals and terrorists'

Rapidly developing artificial intelligence technology may have prevented the Boston Marathon bombing, but it might also become law enforcement's newest nightmare.

That was the message from Ed Davis, who was Boston's police commissioner during the deadly terrorist attack on April 15, 2013. A decade after the attack, which killed three people and injured hundreds, he told Fox News Digital that AI "will ultimately improve investigations and allow many dangerous criminals to be brought to justice."

"Use of artificial intelligence systems applied to secret and top-secret databases could very well have prevented the Boston Marathon bombing," he said.

"However, it may be years before this happens. But right now, the government needs to be aware of the downsides and mitigate the risks of AI."

Medical workers help injured people after bombs exploded near the finish line of the Boston Marathon on April 15, 2013. (AP)

Davis, who has since retired and founded a security consulting firm, testified at last week's Senate subcommittee hearing on emerging national security threats about how new technology, particularly AI, has changed policing in the last 10 years.

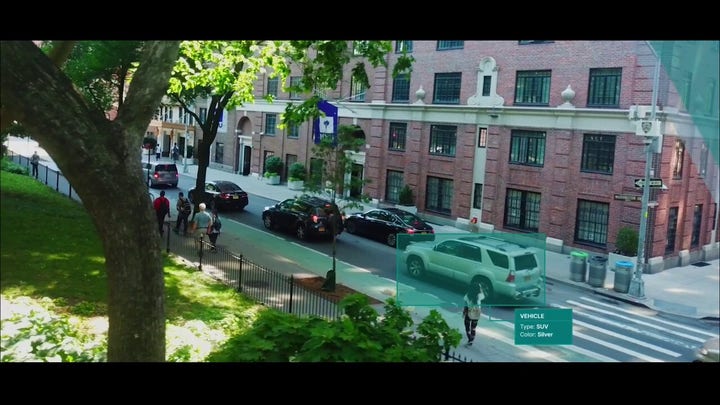

"One of the most significant advantages of new AI-driven imaging devices is in the ability to transform traditional video surveillance systems into real-time data sources and proactive investigative tools," Davis said during the hearing.

"Today’s cameras and coordinated systems have the potential to provide analytics in real time, identify possibly dangerous items, as well as react and pivot based on crowd dynamics such as abnormal movement patterns or gathering."

The building blocks of a proactive law enforcement system already exist.

Boston Police Commissioner Ed Davis testifies during the Senate Homeland Security and Governmental Affairs Committee hearing on Capitol Hill, July 10, 2013, to review the lessons learned from the Boston Marathon bombings. (AP Photo / J. Scott Applewhite)

Davis pointed to a couple of companies whose AI software analyzes large volumes of video to anticipate what is likely to happen in a crowd.

For example, the video analytics company Genetec uses high-tech cameras and AI software from Vintra that can learn "from normal activity" to notify operators of "approaching threats and of anomalies."

Vintra IQ can review live or recorded video 20 times faster than an average human, identify every individual a suspect has come in contact with and then rank those interactions to find the most recurrent relationships, the company says on its website.

The AI software recreates the suspect's "distinct" patterns, matches events to those patterns and finds the "anomalies where known patterns are violated" in real life, according to Vintra's website.

Investigators did that work by hand as they hunted down the bombers, but AI-driven technology "solves manually searching the overwhelming amount of data produced by use of thousands of cameras," Davis said.

In the case of the Boston Marathon bombing, pictures and videos from witnesses were posted all over social media, and armchair detectives concocted all kinds of conspiracies and theories that turned the manhunt into a runaway train.

Investigators debated internally whether to release blurry security images of the primary suspects, Tamerlan and Dzhokhar Tsarnaev. Davis supported releasing them.

"I was convinced if we [ran the photos] these guys would be in our pocket within a couple of hours – people know who committed these crimes and will help us," Davis said during an interview for Netflix's recent documentary about the bombing.

Boston Marathon bombers Tamerlan Tsarnaev, left, and Dzhokhar Tsarnaev (The Associated Press / Lowell Sun & Robin Young / File)

The photos were ultimately released but not before they were leaked to the press.

Within 24 hours of the photos' release, Dzhokhar, 19, was identified as one of the bombers, and investigators retraced the teenager's steps, which led them to his older brother, the mastermind behind the plot.

"Since 2013, the government has made significant improvements in the realm of security measures, including cybersecurity, border security and emergency response planning," Davis told lawmakers.

"These improvements include more advanced technologies, more comprehensive planning and increased public education and awareness supported by many private-public relationships and innovative companies."

Joselyn Perez of Methuen, Massachusetts, left, who was at the Boston Marathon in 2013 and survived the bombing, embraces her mother, Sara Valverde Perez, who was hospitalized as a result of the bombing that day, in Boston on April 15, 2021. Joselyn's brother, Yoelin Perez, embraces them as church bells ring to mark the moment that the bombs went off eight years earlier. (Jessica Rinaldi / Boston Globe via Getty Images)

But what happens when the same technology is in the hands of bad guys?

The same data can be used to interfere with an ongoing investigation and send police on a wild goose chase, Davis said.

In the aftermath of the Boston bombing, Davis said, investigators had to sift through thousands of photos to authenticate them because members of the public had used Photoshop to doctor images.

"They photoshopped a suspicious person on a roof near the attacks and photoshopped a bag at the attack site in another photograph," he said. "These edited photos added an additional challenge, necessitating us to verify and rule out fakes from the public, complicating the monumental tasks already at hand."

And that's technology that existed 10 years ago.

Now, "deepfakes" use AI to create realistic, false images of people and replicate their voices. The technology is already being used in ransom scams and other schemes.

"These ‘deepfakes,’ when used to interfere or disrupt an investigation, pose a distinct challenge to law enforcement that Congress and legislation must anticipate and prepare for," Davis said.

"Laws and regulations need to be formulated to safeguard this profound technology advancement as it continues to expand. Nefarious use of AI presents a clear and present danger to the safety of the American public."

"Technology will save lives," Davis said. But "as new technology becomes available to law enforcement, it is also available to criminals and terrorists."

Davis said the private sector is already using the latest AI tech, but policing "still lags woefully" behind, in part because of a lack of resources and information.

But there's also a general hesitancy among law enforcement to implement "controversial techniques," the former Boston commissioner said.

That's why, he argues, it's vital that government officials get involved to provide a framework for "acceptable police procedures."

But at this point, AI's technological advances appear to be outpacing congressional debate, and the White House hasn't publicly discussed the topic much since it released the "Blueprint for an AI Bill of Rights" last October.
