FTC stakes out turf as top AI cop: ‘Prepared to use all our tools’

FTC says it's staffing up to meet challenge

The Federal Trade Commission (FTC) is making a play to be a key regulator of artificial intelligence (AI) systems, just as technology heavyweights and policymakers are clamoring for federal government oversight of AI applications.

Last week’s call for a moratorium on new AI development from tech figures such as Elon Musk and Steve Wozniak kick-started a discussion about whether and how the government should step in and put guardrails around potentially dangerous AI systems. Several lawmakers responded by saying a moratorium would be difficult to impose, leaving a huge gap between calls for action and the realities of how quickly Congress can act.

However, the FTC has made it clear over the last week that it is prepared to bridge that gap and take a stab at regulating emerging AI systems. The federal agency tasked with policing "deceptive or unfair business practices" says it has a dog in this fight and is building up a capacity to take on the threats that AI poses to wary consumers.

In late March, an FTC attorney said "chatbots" that mimic human language in text and video can be used to produce fake and misleading content, such as spear-phishing emails, fake websites and posts, and fake consumer reviews aimed at fooling consumers. Michael Atleson, a lawyer for the FTC’s Division of Advertising Practices, indicated that the FTC is watching these developments closely for possible violations of consumer protection law – either by AI developers or companies that use AI products.

The Federal Trade Commission, led by Chair Lina Khan, is staking out a claim to regulate artificial intelligence products that could be used to deceive customers. (Al Drago / Bloomberg via Getty Images / File)

"The FTC Act’s prohibition on deceptive or unfair conduct can apply if you make, sell, or use a tool that is effectively designed to deceive – even if that’s not its intended or sole purpose," he warned in a post on the FTC site.

That warning was issued just before dozens of notable tech luminaries called for a pause in the further development of OpenAI’s ChatGPT, a new version of which was released last month. Their letter called for a pause in any development beyond OpenAI’s GPT-4 iteration amid fears that the technology is being developed faster than anyone can work out how it should be regulated.

Atleson said companies developing or using this kind of AI system need to consider whether they "should even be making or selling it" based on its ability to be misused for fraudulent purposes.

"Then ask yourself whether such risks are high enough that you shouldn’t offer the product at all," he said.

Companies also need to consider whether they are mitigating the risk that AI will be used to defraud customers and whether AI chatbots are being used to mislead them.

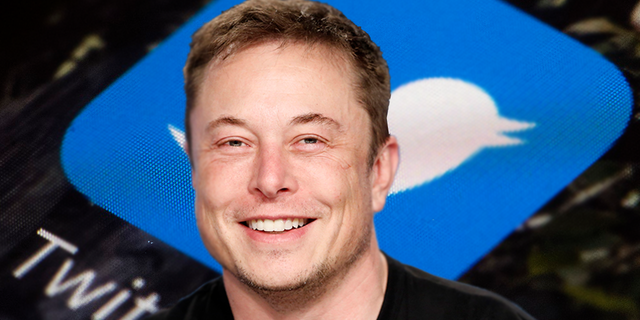

SpaceX founder Elon Musk is one of several tech luminaries who have called for a pause in developing next-generation AI chatbots. (AP Photo / Matt Rourke | Reuters / Joe Skipper)

"Celebrity deepfakes are already common, for example, and have been popping up in ads," Atleson said. "We’ve previously warned companies that misleading consumers via doppelgangers, such as fake dating profiles, phony followers, deepfakes or chatbots, could result – and in fact have resulted – in FTC enforcement actions."

Atleson issued a similar warning in February when he emphasized a series of problems surrounding chatbots. Among other things, he warned that AI development companies must guard against "exaggerating what your AI product can do" as well as the potential risks of putting a new AI product on the market.

"If something goes wrong – maybe it fails or yields biased results – you can’t just blame a third-party developer of the technology," he said. "And you can’t say you’re not responsible because that technology is a ‘black box’ you can’t understand or didn’t know how to test."

He also warned more broadly that "AI" has become a hot marketing term, and developers need to be careful about "overusing and abusing" promises related to AI just to make a sale.

"Marketers should know that – for FTC enforcement purposes – false or unsubstantiated claims about a product’s efficacy are our bread and butter," he said.

Over the weekend, another FTC official said the agency is building up capacity to handle consumer complaints related to AI. Samuel Levine, director of the FTC’s Bureau of Consumer Protection, said the FTC has already brought cases related to AI and is "engaged in relevant rulemaking and market studies" on the issue.

"Today, this experience, along with our flexible authority and deep bench of talent, are allowing us to deliver clear, timely warnings around the risks posed by this technology," he said.

"The FTC welcomes innovation, but being innovative is not a license to be reckless," Levine added. "We are prepared to use all our tools, including enforcement, to challenge harmful practices in this area."

Rep. Jay Obernolte, R-Calif., has warned that Congress needs to first understand the dangers of AI before regulating it, though the FTC seems prepared to regulate aspects of AI related to consumer protection. (Tom Williams / CQ-Roll Call Inc. via Getty Images / File)

The FTC is recruiting top technologists to the agency and is working with partners in Europe, academics and others to stay current.

It's not immediately clear how Congress might react to aggressive FTC oversight of AI. The chair of the FTC, Lina Khan, has been criticized by conservatives recently for her demand that Twitter hand over a list of reporters who were involved in the "Twitter Files," which showed the company was coordinating with federal officials on censoring some Twitter content.

At the same time, however, many congressional Republicans appear likely to support some sort of government oversight of AI over fears that Silicon Valley developers are, for example, inserting left-leaning guardrails into how ChatGPT answers questions.

One early test of the FTC’s capacity to cope with the growing interest in regulating AI is a call from the Center for AI and Digital Policy (CAIDP) to halt any further development of ChatGPT and require independent assessments of the AI chat tool before further releases. CAIDP, which aims to ensure AI promotes "broad social inclusion based on fundamental rights, democratic institutions and the rule of law," argued in its submission to the FTC that ChatGPT is "biased, deceptive and a risk to privacy and public safety."